Thoughts about home labs and combining AMD Threadripper with VMware

In the last couple of weeks I have been busy building a new home lab environment. In this blog post I want to go over the hardware and software I chose for the lab and why, and why you should consider building your own lab. I hope it helps if you are looking for a new home lab environment.

First off, why is it important to have a home lab?

Well, for me it helps with writing articles. If I write about a new technology, I want to have tested it in my lab environment first. I also create a lot of scripts, and scripting in a customer’s production environment can be difficult. When you run scripts against your own AD, you know what the result should be. But when I created my very first home lab, it was just because I wanted to do everything myself. Back then I worked as a Citrix administrator, and someone else had already set up the AD, DNS, DHCP, file server, SQL and the complete Citrix environment. Doing all of this myself for the first time in my own lab, using Microsoft and Citrix course material, really helped me understand the environment and, as a bonus, helped me get certified. That last reason is why I think every IT administrator should have their own lab. Of course, a lab these days can be a high-spec laptop or Azure credits. You don’t need a complete server in your home anymore!

Why did I choose to buy new hardware for the lab instead of using Azure?

For me the lab is something that runs 24/7. I use the VDI system on the lab as my own workspace, and the DHCP and DNS servers also provide DHCP and DNS for my whole house, including Wi-Fi. So I calculated what running 24/7 on Azure would cost: the budget I had for the new lab, $2000, would have been spent on Azure in 6 months with a minimal environment. So I decided to buy hardware again.
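The Azure comparison boils down to simple break-even arithmetic. Below is a minimal Python sketch of it, assuming the only inputs are the figures from this post: a $2,000 budget that a minimal 24/7 Azure environment would use up in about six months. The implied monthly cost of roughly $333 is derived from those two numbers, not taken from an Azure price list, and the sketch ignores the electricity the home lab itself uses. The function names are illustrative.

```python
from fractions import Fraction
import math

# Figures from the post; everything else is derived from them.
BUDGET_USD = Fraction(2000)   # budget for the new lab (roughly the hardware cost)
MONTHS_BUDGET_LASTS = 6       # how long that budget lasts on Azure, running 24/7

# Implied average Azure cost per month: 2000 / 6 = 1000/3, about $333.33.
# Fraction keeps the arithmetic exact, so 6 months cost exactly $2,000.
monthly_cloud_cost = BUDGET_USD / MONTHS_BUDGET_LASTS


def break_even_month(hardware_cost: Fraction, monthly_cost: Fraction) -> int:
    """First month in which cumulative cloud spend reaches the one-off hardware cost."""
    return math.ceil(hardware_cost / monthly_cost)


def cloud_spend(months: int, monthly_cost: Fraction) -> Fraction:
    """Total cloud spend after running 24/7 for the given number of months."""
    return months * monthly_cost


if __name__ == "__main__":
    print(break_even_month(BUDGET_USD, monthly_cloud_cost))   # 6
    print(float(cloud_spend(36, monthly_cloud_cost)))          # 12000.0 over three years
```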

The hardware, and why this hardware?

The hardware I bought is AMD workstation hardware:

AMD Ryzen Threadripper 1920X CPU (12 cores/24 threads, 3.5 GHz)

The CPU I bought is from AMD. This is the first time I have bought an AMD CPU for my lab environment (my previous labs were on Intel Xeon). So why AMD? Well, Threadripper delivers an incredible number of cores (up to 32) and threads (up to 64), and it does this at a high clock speed (3 GHz+). After reading on forums that VMware vSphere works with Ryzen and Threadripper CPUs from version 6.5 U1 onwards, I leaned towards it. What pulled me over the line was that AMD had just launched its second-gen Threadripper CPUs, which slashed the prices of the first-gen CPUs in half. So I bought the first-gen 1920X with 12 cores and 24 threads at 3.5 GHz. My old lab environment had 2 x 6-core Xeons (24 threads total) at 2.2 GHz, so this is a nice speed bump. It also gives me a great upgrade path, because the second-gen Threadrippers use the same socket as the first gen and are supported by the motherboard. If needed, I can upgrade to the 2990WX, a 32-core, 64-thread, 3 GHz beast!
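As a rough illustration of the speed bump, the sketch below compares the old and new CPU configurations using the core counts and base clocks from this post. The "core-GHz" figure (physical cores times base clock) is a crude metric chosen for this example; it ignores IPC, boost clocks, memory and NUMA layout, so treat the ratio as a ballpark, not a benchmark. The `CpuConfig` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuConfig:
    """Hypothetical helper describing a host's CPU setup."""
    name: str
    sockets: int
    cores_per_socket: int
    threads_per_core: int
    base_ghz: float

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    def core_ghz(self) -> float:
        # Crude throughput proxy: physical cores x base clock.
        # Ignores IPC differences, boost clocks, memory and NUMA effects.
        return self.cores * self.base_ghz


old_lab = CpuConfig("2x 6-core Xeon", sockets=2, cores_per_socket=6, threads_per_core=2, base_ghz=2.2)
new_lab = CpuConfig("Threadripper 1920X", sockets=1, cores_per_socket=12, threads_per_core=2, base_ghz=3.5)
upgrade = CpuConfig("Threadripper 2990WX", sockets=1, cores_per_socket=32, threads_per_core=2, base_ghz=3.0)

if __name__ == "__main__":
    print(old_lab.threads, new_lab.threads)                    # 24 24
    print(round(new_lab.core_ghz() / old_lab.core_ghz(), 2))   # 1.59
    print(round(upgrade.core_ghz() / old_lab.core_ghz(), 2))   # 3.64
```

Because both systems have the same 12 cores and 24 threads, the ratio here is simply the base-clock ratio of 3.5 to 2.2 GHz.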

ASRock X399 Taichi motherboard

I chose the ASRock X399 Taichi for a few reasons. The first is that it’s the most workstation-like motherboard in the X399 series; most boards are focused more on gaming. The Taichi comes with two Intel NICs, which is great. It also has 8 SATA ports, 3 M.2 slots and a built-in RAID controller. Another reason was that I found out on the forums (Overclockers.uk) that this motherboard works with vSphere 6.7 U1 without adding any drivers to vSphere. So this board is plug and play for virtualization.

Corsair Vengeance LPX DDR4-2666, 2 x 64 GB kits (8 x 16 GB, 128 GB total RAM)

For the RAM I chose the Vengeance LPX kit because it is competitively priced and low-profile, which leaves enough clearance for the CPU cooler. I went with 8 sticks of 16 GB (two quad-channel kits). This gives me 128 GB of RAM, which is the maximum the motherboard supports. That limit is important to keep in mind.

GeForce GT 710

Because AMD Threadripper doesn’t have a built-in GPU, I needed a graphics card. I chose the cheapest one available at the time, the GeForce GT 710.

Samsung 970 EVO Plus 1TB

NVMe drives are great for virtualization because they are very fast, and the 970 EVO Plus is one of the fastest PCIe 3.0 drives out there, with more than 3000 MB/s of throughput. I chose to buy just one drive, which I’m going to use for caching disks and Citrix MCS. For the file server and similar workloads I will be using my Synology NAS (with redundancy). If you do need redundancy, buy two drives.
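To put the 3000 MB/s figure in context, a PCIe 3.0 x4 link (the interface the 970 EVO Plus uses) has a theoretical ceiling of just under 4 GB/s. The sketch below works that out from the link parameters: 8 GT/s per lane and 128b/130b line encoding. It ignores packet and protocol overhead, so real drives top out somewhat lower; the function name is illustrative.

```python
from fractions import Fraction


def pcie_link_mb_per_s(gt_per_s: int, lanes: int,
                       payload_bits: int = 128, line_bits: int = 130) -> Fraction:
    """Theoretical one-direction bandwidth of a PCIe link in MB/s (decimal megabytes).

    gt_per_s is the per-lane transfer rate in gigatransfers per second;
    the defaults describe the 128b/130b encoding used by PCIe 3.0.
    Protocol overhead (packet headers, flow control) is not modelled.
    """
    usable_bits_per_s = gt_per_s * 10**9 * lanes * Fraction(payload_bits, line_bits)
    return usable_bits_per_s / 8 / 10**6   # bits -> bytes -> MB


if __name__ == "__main__":
    ceiling = pcie_link_mb_per_s(8, 4)           # PCIe 3.0 x4
    print(round(float(ceiling)))                 # 3938
    print(f"{3000 / float(ceiling):.0%}")        # 76% of the link for a 3000 MB/s drive
```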

Cooler Master V750 Gold PSU

The Cooler Master V750 Gold is a solid, well-tested power supply that is compatible with Threadripper because it has an 8-pin CPU power lead. 750 watts is plenty of capacity for this system.

Cooler Master H500 case

The Cooler Master H500 is a big, roomy case with plenty of space for cable management. It has two large 200 mm fans in the front and a 120 mm fan in the back, which gives it enough airflow. More importantly, all the fans sit behind either a dust filter or fine mesh, which keeps the dust out. Because the lab runs 24/7, you don’t want it to clog up with dust.

Cooler Master Wraith Ripper cooler

The AMD Threadripper CPU doesn’t come with a CPU cooler. Luckily there are a few options to choose from. My choice is the Wraith Ripper, for three reasons. One, it is an air cooler: in a workstation you generally want to skip water coolers, because they are not as reliable as air coolers over the long term. Two, it was developed together with AMD and is the official cooler for the 2nd-gen Threadrippers. And three, it has the easiest installation of all the coolers: you just need to tighten four screws.

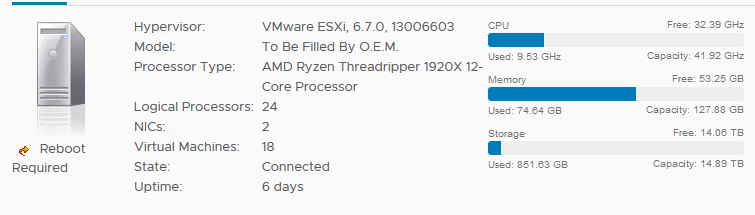

So that is the complete hardware list and my reasoning behind this setup. The total price was 1,850 euros, so around 2,000 dollars, which was my budget. Here you see the total system running:

And a nice compliment on the setup from AMD.

The software

Well, the software was a lot easier than selecting all the hardware. As a VMware vExpert and a fan of PowerCLI, I of course chose vSphere (ESXi) 6.7 U2 with vCenter 6.7. Both worked perfectly out of the box on this system; I didn’t need to add any extra drivers. I created an installation stick with Rufus and installed ESXi on an extra USB stick plugged into the back. As soon as the system was installed and rebooted, I could see both network adapters, the NVMe drive, the SATA ports and so on. Mounting my Synology VM LUN on the new home lab was easy, and transferring the VMs was done in a day. I did take extra time to create new domain controllers and file servers, going from Windows Server 2012 R2 to Windows Server 2019 Core. I also decided to build a completely new Citrix environment and start using MCS instead of PVS.

Power consumption

Power is not cheap, and in the Netherlands, where I live, the government taxes electricity heavily. So leaving a system on 24/7 can be expensive. So how is the power consumption of the lab? Well, the system with all my VMs turned on uses around 140 watts. Together with my Synology and Sophos UTM firewall, the complete setup uses around 180 watts. That seems like a lot, but my last lab environment used around 800 watts, so I save 620 watts. The old hardware also gave off a lot of heat, which I cooled with fans costing an extra 100 watts, so the saving in summer is 720 watts. I can’t wait for my next power bill, I will be smiling 😊
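The savings are easier to judge in kilowatt-hours. The sketch below converts the wattages from this post into yearly energy. Two inputs are assumptions for the example, not figures from the post: a three-month summer (92 days) for the extra cooling, and the electricity price, which is a placeholder you should replace with your own tariff.

```python
HOURS_PER_YEAR = 24 * 365   # 8760 hours, ignoring leap years
SUMMER_HOURS = 24 * 92      # assumption: extra cooling runs roughly June to August


def kwh(watts: float, hours: float) -> float:
    """Energy drawn by a constant load over the given hours, in kWh."""
    return watts * hours / 1000


# Wattages from the post.
OLD_LAB_W = 800        # previous lab, including NAS and firewall
NEW_LAB_W = 180        # new lab plus Synology and Sophos UTM firewall
OLD_COOLING_W = 100    # extra fans the old lab needed in summer

yearly_saving_kwh = (kwh(OLD_LAB_W - NEW_LAB_W, HOURS_PER_YEAR)
                     + kwh(OLD_COOLING_W, SUMMER_HOURS))


def yearly_cost(energy_kwh: float, price_per_kwh: float) -> float:
    """Cost of the given energy at a flat price per kWh."""
    return energy_kwh * price_per_kwh


if __name__ == "__main__":
    print(round(yearly_saving_kwh, 1))   # 5652.0
    PLACEHOLDER_PRICE_EUR_PER_KWH = 0.25  # hypothetical tariff, not a quoted Dutch price
    print(round(yearly_cost(yearly_saving_kwh, PLACEHOLDER_PRICE_EUR_PER_KWH), 2))   # 1413.0
```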

Why not just buy old server hardware?

Another way to go with home labs is to buy old server hardware. If you want 24 threads and 128 GB of RAM and you buy a second-hand DL380 G8, you only have to spend around 1000 euros. Or maybe you can pick up an old server from work for cheap. But as someone who had server hardware in his house for almost 10 years, I cannot recommend it. Server hardware is stable and runs really well 24/7, but it’s loud and, as you can see under Power consumption, it needs a lot more power, which can be really expensive in the long term. And the last thing is of course the speed difference. You can easily buy an AMD Threadripper or Intel i9 with clock speeds over 3 GHz and NVMe support, but finding a cheap Xeon above 3.0 GHz is difficult. All that being said, if you can get a server really cheap and you only turn it on when testing or developing, it can be a good option.

Alternatives

I built an AMD Threadripper workstation as a vSphere server. But what are some good alternatives? Of course you can build the same kind of workstation with an Intel Core i9 or Xeon W CPU. But you could also check out the new Intel i7 NUC systems like the NUC8i7BEH2. It’s around 500 dollars, it comes with a quad-core, 8-thread i7 at 2.7 GHz, and it uses even less power. One big drawback of the NUC is RAM support. It can only handle 32 GB, which is far too little for a complete AD, DHCP, DNS, file server and Citrix system. But they are cheap, so you could buy multiple and use a Synology as shared storage between them. Another option is the Supermicro SuperServer range, like the E200-8D. It’s just a little bit bigger than the NUC and is also power efficient. Its 6-core, 12-thread Xeon runs at only 1.9 GHz, but it supports up to 128 GB of RAM.
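The "buy multiple NUCs" option can be sized with a quick calculation. The sketch below uses the figures from this post (32 GB of RAM and 8 threads per NUC at around $500) and works out how many NUCs it takes to match the 128 GB of the Threadripper host. It is a capacity sum only: a single VM can still use at most one node's 32 GB, and shared storage and networking are not included. The `Node` class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    """Hypothetical description of one lab host."""
    ram_gb: int
    threads: int
    price_usd: int


NUC = Node(ram_gb=32, threads=8, price_usd=500)   # figures from the post


def nodes_for_ram(target_ram_gb: int, node: Node) -> int:
    """Smallest number of nodes whose combined RAM reaches the target (ceiling division)."""
    return -(-target_ram_gb // node.ram_gb)


def cluster_totals(count: int, node: Node) -> Node:
    """Combined RAM, threads and price of `count` identical nodes."""
    return Node(node.ram_gb * count, node.threads * count, node.price_usd * count)


if __name__ == "__main__":
    n = nodes_for_ram(128, NUC)
    print(n)                        # 4
    print(cluster_totals(n, NUC))   # Node(ram_gb=128, threads=32, price_usd=2000)
```

At these approximate prices, four NUCs land on roughly the same $2,000 budget as the Threadripper build.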

Do you have any good home lab tips? Please leave them in the comments.

I hope this was informative. For questions or comments, you can always leave a reaction in the comment section or contact me:

I’m actually curious, why did you use the EVO Plus instead of the Pro version?

The Pro version lasts longer than the EVO version.

Hi Henk!

Because I use the disk for cache and MCS, I chose a cheaper one. And the EVO Plus sits midway between the EVO and the Pro.

Nice article Chris,

I was in your shoes a few months back, when I was checking the cost of some instances in AWS and Azure, and it was rather prohibitive. I ended up buying an HPE DL325 G10 server with an AMD EPYC 7401P (24C/48T) and 32 GB DDR4 as standard. It was $1,450, and I had to buy a 1 TB SSD, which still ended up much cheaper than the “cloud” offering, plus I have the horsepower I need.

I am using a vSphere home lab made up of three Intel NUC8i5BEH units.

The NUCs do support 64GB RAM each (using 2x 32GB Samsung SO-DIMMs).

Each NUC has a Samsung 970 Pro NVMe as the caching tier and a Samsung 860 Pro SSD as the capacity tier, building a 3-node vSAN cluster.

It’s cool that you can use 64 GB. Strange that Intel doesn’t specify it.

William Lam did a nice write-up about 64 GB RAM support in a NUC here: https://www.virtuallyghetto.com/2019/03/64gb-memory-on-the-intel-nucs.html

Nice article, it has been very useful for me.

I want to buy a machine for the same work, virtualization.

But I have a question: why did you choose this motherboard instead of the “GIGABYTE X399 AORUS Gaming 7”? I’ve heard of problems with both, and I’m not sure which is better for virtualization. Could you help me?

Hi Luis,

With vSphere 6.7 U2 there are no problems with the X399 Taichi, and the Taichi has two NICs. That’s why I chose the Taichi.

Hope this helps!

Regards, Chris

Yes, thanks a lot for your fast answer!

I have saved a lot of time configuring my new PC 🙂 Thanks again

Hello,

Very nice write-up, Chris. I just have a question: do you think the new 2920X, 2950X and 2970X will work fine with vSphere 6.7? I am thinking about building a small lab for VMware DCV certs, so any comments/help are much appreciated.

Cheers,

As far as I know they should just work. Support depends more on the motherboard, so you may need to do a BIOS update to support the new CPUs.

Thank you for the awesome article Chris!

Hello Chris,

Also a Dutchie ;-). I really like your setup. I was thinking about a new X299 platform, with the same goals: running VMware and developing. But after reading your post, an X399 setup will save me money.

My plan is to run VMware Workstation (which I always do, with VMUG Advantage) instead of a physical lab. It is more flexible, and as I wrote, I only run my labs for short periods. The thing is, 64 GB is not enough in my current system, so I need at least 128 GB and would like to have 256 GB with 24 threads. So, a new build.

Thank you for sharing the X399 setup. It is in my thoughts.

Thank you for the post, Chris. I was hoping you could help me with a question. I recently got promoted at work to network admin and want to focus more on the security side of the house. My long-term plans are pentesting and network defense. My goal is to build a system similar to the one you have put together here. I want to go all-NVMe, with external NAS capacity. Would this motherboard support three to four NVMe drives at once? Information online is limited, and I hope you can shed some light.

Thank you very much

Yes, the board supports filling all the NVMe slots at once. I don’t know if the onboard RAID controller can handle them, if you want to do RAID.

Hi

Thanks for this great post. I’ve just built a new server based on your great specs 🙂 I’m wondering if the Samsung 970 EVO Plus 1TB can be used as a datastore on the current ESXi 6.7?

Hey, that’s awesome! You can use it as a datastore! Good luck!

Hi Chris

Thanks for the quick reply and confirmation that it works. I’ve always been a fan of AMD, and their semi-demise a while ago was a disappointment, but their resurrection has been spectacular. I’ve been considering moving my two ESXi servers to AMD, and your post was the spur I needed. What a monster it is! I’m looking forward to an interesting Christmas. 🙂

I’d like to wish you and your family the best for the holiday and New Year.

Many thanks again for the help.

Bill

Hi Chris,

Very informative article. Thanks for the effort and for sharing your system details.

Can you give us the exact part code/model number or a URL for the memory (Vengeance LPX 2 x 64 GB DDR4 kit, 2666 MHz, 8 x 16 GB, 128 GB RAM)?

I bought two of these https://www.megekko.nl/product/2046/955562/DDR4-Geheugen/Corsair-DDR4-Vengeance-LPX-4x16GB-2666-C16-Geheugenmodule

Thank you for your help.

Hi Chris,

Just wondering, have you already tried ESXi 7 on your home lab?

Thanks, Ron

No, not yet!

Thanks for the post, Chris. I am planning to order the same parts you listed for my home virtual network lab. I have a question regarding the RAM: the specs of the Threadripper CPU list 8 memory channels, and your configuration for 128 GB used 8 x 16 GB modules. I am planning on getting 128 GB, but I wasn’t sure if I should get 4 modules of 32 GB each and leave the remaining 4 memory slots on the motherboard free for possible future upgrades. If I go with the 4 x 32 GB configuration running VMware ESXi, do you know if memory performance will drop because it only connects to 4 memory channels instead of 8?

Thanks,

Victor

Hi Victor,

Great to hear that I inspired you to buy the same setup. My setup is still running great without any issues.

As far as I know, first- and second-gen Threadrippers are quad-channel memory CPUs. So there is no difference in performance between 8 and 4 sticks!

Good luck!
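The quad-channel point can be checked with the usual peak-bandwidth formula: transfer rate times 8 bytes per 64-bit DDR4 channel times the number of channels in use. The sketch below assumes DDR4-2666 and a board with four channels and two slots per channel, populated one DIMM per channel before any channel gets a second stick, as board manuals typically recommend. Under that assumption four and eight sticks give the same theoretical peak. It is a theoretical figure only; on some platforms two DIMMs per channel lowers the supported memory speed, so check the board's documentation. The function names are illustrative.

```python
def channels_populated(dimms: int, channels: int = 4, slots_per_channel: int = 2) -> int:
    """Channels in use, assuming DIMMs are spread one per channel before doubling up."""
    if not 0 < dimms <= channels * slots_per_channel:
        raise ValueError("DIMM count does not fit this board")
    return min(dimms, channels)


def peak_bandwidth_gb_s(mt_per_s: int, channels_in_use: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal); a DDR4 channel is 64 bits wide."""
    return mt_per_s * 10**6 * bytes_per_transfer * channels_in_use / 10**9


if __name__ == "__main__":
    for dimms in (4, 8):
        used = channels_populated(dimms)
        print(dimms, used, round(peak_bandwidth_gb_s(2666, used), 1))
    # 4 4 85.3
    # 8 4 85.3
```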

Glad to hear that your setup is still running great.

Thank you so much for clarifying and all your help!

Hello Chris,

I am also one who is thankful for your post. My last ESXi build was in 2012 with an ASRock Z68 Extreme3 Gen 3 board, and it has served me well. Your build suggestions fit my needs quite well. I made a few modifications, but overall your suggestions were much appreciated.

Here are the main items for my build:

ASRock X399 Taichi sTR4 AMD X399 SATA 6Gb/s ATX AMD Motherboard.

AMD 2nd Gen Ryzen Threadripper 2950X, 16-Core, 32-Thread, 4.4 GHz Max Boost (3.5 GHz Base).

CORSAIR Vengeance LPX 64GB (4 x 16GB) 288-Pin DDR4 SDRAM DDR4 2666 (PC4 21300) AMD X399 Compatible Desktop Memory Model CMK64GX4M4A2666C16.

Noctua NH-U14S TR4-SP3, Premium-grade CPU Cooler for AMD sTRX4/TR4/SP3.

ZOTAC GeForce GT 710 1GB DDR3 PCIE x 1 , DVI, HDMI, VGA, Low Profile Graphic Card.

HighPoint SuperSpeed USB 3.0 PCI-Express 2.0 x4 4-Port Card, HighPoint RocketU 1144A

LSI MegaRAID SAS 9361-8i & Intel RAID Expander RES3FV288

I wish to highlight the need for low-profile memory with the Noctua NH-U14S cooler if all the banks are going to be used. I am still at the early stage of my testing. I installed both ESXi 6.7 Update 3 and ESXi 7.0 (VMware-VMvisor-Installer-7.0.0-15843807) via the LSI card and onboard, and everything seems to work, including passthrough. This motherboard has two Intel I211-AT Gbit NICs that are not listed in the VMware Compatibility Guide for ESXi 7, but they seem to work. See: https://www.vmware.com/resources/compatibility/detail.php?deviceCategory=io&productid=37601

Thank You again and all the best,

Philippe

Hi Philippe,

Thank you for sharing your experience and machine specs.

I haven’t tested with 7.0 yet, so good to hear that it works!

Thank you and enjoy your setup!

Thanks for this Guide.

I can also confirm that my build with a 1920X works with 4 x 32 GB Corsair 3200 LPX DDR4 DIMMs and 4 x 16 GB Corsair 3200 LPX DDR4 DIMMs on the ASRock X399 Taichi. (I’m sure 8 x 32 GB sticks will also work; I’m just waiting for 4 more to come on sale somewhere.)

VMware ESXi, 7.0.0, 16324942

24 threads and 192 GB RAM!!!

Good to hear!

Chris, have you had any stability issues with your setup?

I’ve got 3 hosts in a similar setup: 2x 1950X and 1x 1920X. I’ve got 2 Asus X399 Prime motherboards and 1 ASRock X399 Fatal1ty (just a bit better featured than the Taichi). The ASRock used to be a Windows box, and when I moved to ESXi 6.7, I picked up the Prime boards because I had also read on forums that they had good compatibility.

I slowly moved through all 6.7 releases and then to 7.0. Sadly, one thing I’ve had to live with the whole time is that all 3 hosts crash with a PSOD about once or twice a month on average. It isn’t really too problematic because they host lab VMs, but I would certainly like them to be stable. The 1920X even has ECC memory.

I’m just curious whether your setup is stable 24/7 over long time frames. It’s been hard to find information about Threadripper compatibility beyond the first wave of people getting PSODs before 6.5 U1. Originally I liked Threadripper for the value and per-socket licensing, but with 7.0, VMUG Advantage gives you up to 16 CPUs.

Hi, sorry for my late response. My setup is reasonably stable. I do experience crashes, but only about once every 6 months, so 2 times a year max. So that’s not too bad.

Adam, I have an almost identical setup, with repeated crashes a couple of times a month: ASRock X399 Taichi / ASUS Prime X399 x2 / 1950X, 1900X, 1920X. I have never had more than one host crash at a time; it’s always single failures.

lmk if you want to discuss

Hi Chris,

Thanks from my end as well: your post made me decide to go the same route and order (almost) the same hardware for my lab environment.

Unfortunately I’m experiencing the same number of crashes as Mikeal mentioned. The count for this month is already at 3, but I really don’t want to go (back) to a Windows Server installation. First, because ESXi is educational for me, and second, because I’m really used to the environment and its possibilities (EVE-NG, various VMs, etc.).

I would be really, really happy if I were only getting 1 or 2 crashes a year, and I’m wondering why I’m experiencing many more. I do have to say that I’ve been running ESXi 7 from the start and have upgraded after every update/patch (I’m aware of the issues with U3, but I was already having PSODs on 7.0.2).

Can you tell me the BIOS options and settings you have enabled for your setup? Maybe there is a difference.

Also, since I see you’re a vExpert, do you have any experience with Runecast? I’m wondering if I could benefit from it, but since I’m no vExpert, I’ll have to pay for a subscription.

Thanks! 😉